
What the driver’s eyes tell the car’s brain.

Andrew Liu, Ph.D., Research Scientist


Part 1: Introduction

| Year | 1970 | 1980 | 1990 | 1995 | 1996 | 1997 | 1998 | 1999 | 2000 | 2001 |
|---|---|---|---|---|---|---|---|---|---|---|
| Fatalities | 52627 | 51091 | 44599 | 41817 | 42065 | 42013 | 41501 | 41717 | 41945 | 42116 |
| Injuries (millions) | | | 3.230 | 3.465 | 3.483 | 3.348 | 3.192 | 3.236 | 3.189 | 3.033 |
| Crashes (millions) | | | 6.471 | 6.699 | 6.770 | 6.624 | 6.335 | 6.279 | 6.394 | 6.323 |
| Vehicle-miles traveled (trillions) | 1.110 | 1.527 | 2.144 | 2.423 | 2.486 | 2.562 | 2.632 | 2.691 | 2.747 | 2.781 |
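Fatality counts alone change relatively little after 1990, so it can help to normalize them by exposure. The sketch below is an illustrative calculation, not part of the original slide: it divides fatalities by vehicle-miles traveled to get the conventional rate per 100 million vehicle-miles (1 trillion miles = 10,000 × 100 million miles), using only the fatality and vehicle-mile rows of the table.

```python
# Fatality and vehicle-mile rows from the table above.
YEARS = [1970, 1980, 1990, 1995, 1996, 1997, 1998, 1999, 2000, 2001]
FATALITIES = [52627, 51091, 44599, 41817, 42065, 42013, 41501, 41717, 41945, 42116]
VMT_TRILLIONS = [1.110, 1.527, 2.144, 2.423, 2.486, 2.562, 2.632, 2.691, 2.747, 2.781]


def fatality_rate_per_100m_vmt(fatalities: int, vmt_trillions: float) -> float:
    """Fatalities per 100 million vehicle-miles (1 trillion = 10,000 x 100 million)."""
    return fatalities / (vmt_trillions * 10_000)


rates = {year: fatality_rate_per_100m_vmt(f, v)
         for year, f, v in zip(YEARS, FATALITIES, VMT_TRILLIONS)}

for year, rate in rates.items():
    print(f"{year}: {rate:.2f}")
```

On these numbers the rate falls from roughly 4.7 deaths per 100 million vehicle-miles in 1970 to roughly 1.5 in 2001, a much larger relative drop than in the raw counts.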

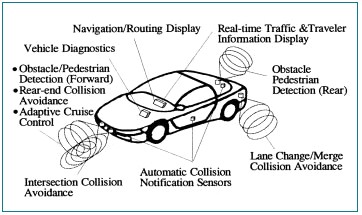

Future IVI technologies

From the US DOT Intelligent Vehicle Initiative (IVI):

- Rear-end Collision Avoidance

- Lane Change/Merge Collision Avoidance

- Road Departure Collision Avoidance

- Intersection Collision Avoidance

- Vision Enhancement

- Vehicle Stability

- Driver Condition Warning

- Driver Distraction and Workload

The IVI web sites are reasonably good sources of information on what the Federal Highway Administration (FHWA) is interested in keeping tabs on.

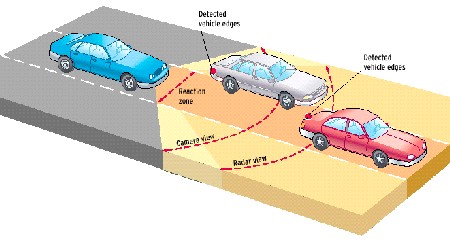

Safety systems – Adaptive cruise control


Following the leader: The Fujitsu Ten system keeps a safe distance behind cars in its lane [reaction zone] by combining radar data on distance with stereo-camera data on the size of the objects. The camera derives the width of cars by detecting their edges [red dots and yellow boxes in the photo]. Objects that are too wide, like a bridge abutment, are ignored. The system's wide field of view allows it to continue tracking vehicles around curves. From Jones (2001).
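The width test in the caption can be sketched with a pinhole-camera model: an object spanning w pixels at range Z meters has a real width of about w·Z/f, where f is the focal length in pixels. This is a minimal sketch, assuming radar supplies the range and the camera supplies the edge-to-edge pixel width; the focal length and the 3 m cutoff are hypothetical values, not Fujitsu Ten's parameters.

```python
from dataclasses import dataclass

# Hypothetical parameters, for illustration only.
FOCAL_LENGTH_PX = 800.0     # camera focal length expressed in pixels
MAX_VEHICLE_WIDTH_M = 3.0   # wider objects are treated as non-vehicles


@dataclass
class Detection:
    range_m: float       # distance to the object, from radar
    pixel_width: float   # width of the object's edge-bounded box in the image


def estimated_width_m(det: Detection) -> float:
    """Pinhole model: real width = pixel width * range / focal length."""
    return det.pixel_width * det.range_m / FOCAL_LENGTH_PX


def is_vehicle_candidate(det: Detection) -> bool:
    """Keep objects of plausible vehicle width; ignore very wide ones such as abutments."""
    return estimated_width_m(det) <= MAX_VEHICLE_WIDTH_M


car = Detection(range_m=40.0, pixel_width=36.0)
abutment = Detection(range_m=40.0, pixel_width=200.0)
print(is_vehicle_candidate(car), is_vehicle_candidate(abutment))
```

Here the car comes out at 1.8 m and is kept, while the abutment comes out at 10 m and is ignored. A stereo camera could also estimate range from disparity; the sketch relies on radar for range, as the caption describes.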

The second category of technology is safety systems. These systems are, in general, active: they take over some of the control functions from the driver for varying periods of time. Research on these systems goes under names such as Intelligent Vehicle systems, advanced safety systems, and ITS (Intelligent Transportation Systems).

This example is a newer form of cruise control. It has been introduced commercially, though I think mainly in Japan and possibly Europe. The idea is to maintain a set speed while also keeping a safe distance behind a leading car. There isn’t much uniformity in the algorithms (which goal gets more weight? what are the operating criteria?), and that only adds to the human factors problems right now.
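One common way to combine the two goals is a constant time-gap spacing policy: compute one acceleration command for holding the set speed, another for holding a gap that grows with speed, and apply the more conservative of the two. The sketch below is a generic illustration under that assumption, not the algorithm of any particular manufacturer; all gains and limits are hypothetical.

```python
from typing import Optional

# Hypothetical tuning values, for illustration only.
TIME_GAP_S = 1.8          # desired time headway behind the leader (s)
STANDSTILL_GAP_M = 5.0    # gap to keep at zero speed (m)
K_SPEED = 0.4             # gain on speed error (1/s)
K_GAP = 0.2               # gain on gap error (1/s^2)
K_REL = 0.6               # gain on relative speed (1/s)
A_MIN, A_MAX = -3.0, 1.5  # comfort limits on commanded acceleration (m/s^2)


def acc_command(v_set: float, v_ego: float,
                gap: Optional[float] = None,
                v_lead: Optional[float] = None) -> float:
    """Commanded acceleration (m/s^2) for a simple ACC sketch.

    v_set: driver's set speed, v_ego: own speed, gap: radar range to the
    leader, v_lead: leader speed (SI units). gap and v_lead are None when
    no vehicle is detected in the lane.
    """
    # Speed control: track the driver's set speed.
    a_speed = K_SPEED * (v_set - v_ego)
    if gap is None or v_lead is None:
        a = a_speed
    else:
        # Gap control: desired gap grows linearly with own speed.
        desired_gap = STANDSTILL_GAP_M + TIME_GAP_S * v_ego
        a_gap = K_GAP * (gap - desired_gap) + K_REL * (v_lead - v_ego)
        # The more conservative (smaller) command wins.
        a = min(a_speed, a_gap)
    # Clamp to comfort limits.
    return max(A_MIN, min(A_MAX, a))


print(acc_command(30.0, 25.0))                          # free road: accelerate
print(acc_command(30.0, 25.0, gap=20.0, v_lead=20.0))   # closing on a slower car
```

Taking the minimum is only one answer to the "which goal has more weight?" question; blending the two commands or switching modes with hysteresis are other choices, and each will feel different to the driver.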

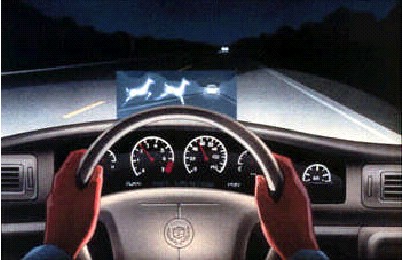

Safety systems – Vision enhancement

Cadillac Night Vision system

This is a newly introduced commercial system and one of the rare uses of a head-up display (HUD) in a car. What are the potential problems here? Many other heat sources, such as recently parked vehicles, could interfere with the detection of true obstacles. What about other lights in a crowded urban setting? Then there are the potential issues with using a HUD.

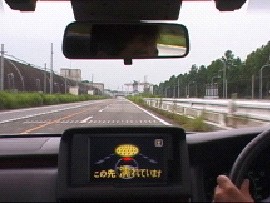

Nissan ASV – Road surface detection. A support system that uses road surface condition information to advise the driver of a wet road surface ahead.

This system uses video technology to preview the condition of the road surface ahead. It could be quite useful in New England and other cold areas if it could detect patches of black ice (of course, the driver still has to take the appropriate action!).

Adaptable vehicle dynamics

The Vehicle Dynamics Control (VDC) system prevents a vehicle from spinning when large incipient oversteer occurs during a lane change on a slippery road surface. It does this by regulating the engine output according to the degree of oversteer detected and by controlling the braking force at all four wheels, so as to reduce oversteer and maintain driving stability.
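A common way for stability-control systems to detect oversteer is to compare the measured yaw rate with the yaw rate the driver's steering input should produce. The sketch below assumes a steady-state "bicycle" vehicle model for that reference and covers only the engine-output part of the intervention; the parameters and the torque-reduction rule are hypothetical, and this is not Nissan's VDC logic.

```python
# Hypothetical vehicle and tuning parameters, for illustration only.
WHEELBASE_M = 2.7
UNDERSTEER_GRADIENT = 0.0025   # rad of steer per m/s^2 of lateral acceleration
OVERSTEER_THRESHOLD = 0.05     # yaw-rate excess tolerated before acting (rad/s)
TORQUE_CUT_GAIN = 2.0          # torque fraction removed per rad/s of excess


def reference_yaw_rate(speed: float, steer_angle: float) -> float:
    """Steady-state bicycle-model yaw rate (rad/s) for a road-wheel steer angle (rad)."""
    return speed * steer_angle / (WHEELBASE_M + UNDERSTEER_GRADIENT * speed ** 2)


def engine_torque_factor(speed: float, steer_angle: float, yaw_rate: float) -> float:
    """Fraction of the driver's requested engine torque to allow (1.0 = no intervention).

    Simplification: oversteer is taken as the measured yaw rate exceeding the
    reference in magnitude; direction and brake distribution are ignored.
    """
    excess = abs(yaw_rate) - abs(reference_yaw_rate(speed, steer_angle))
    if excess <= OVERSTEER_THRESHOLD:
        return 1.0
    # Cut torque in proportion to how far the excess goes past the threshold.
    return max(0.0, 1.0 - TORQUE_CUT_GAIN * (excess - OVERSTEER_THRESHOLD))


# At 25 m/s with 0.02 rad of steer, the reference yaw rate is about 0.117 rad/s.
print(engine_torque_factor(25.0, 0.02, 0.10))  # car following the steering
print(engine_torque_factor(25.0, 0.02, 0.30))  # car rotating too fast: cut torque
```

Real systems also distribute braking force across the individual wheels, which this sketch leaves out.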

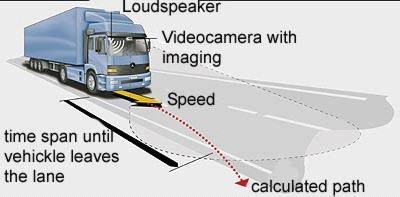

Safety systems – Road departure

This type of technology was used by the NAVLAB group at Carnegie Mellon to build a car that steered itself for most of the way across the US in 1995 (the drivers still handled the throttle and brakes).

This is relatively old technology as far as these systems are concerned; it has probably been around for 10 years. It is designed to keep sleepy or inattentive drivers from drifting out of their lane. It is usually based on video: the system watches the stripes on the road and predicts the car’s path and its deviation from the lane. That is a big problem for the Boston area (e.g., Rt. 128, which has no visible lines in the winter!).

More recently, these systems have been coupled with active steering instead of just a warning: they either imitate rumble strips on the road or actually steer the car back into the lane. This brings up some interesting problems of coordinating control with the driver. (How does the system know when the driver intends to change lanes?)
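Lane-departure research often uses time to line crossing (TLC): the lateral room left before the car's edge reaches the lane line, divided by the lateral drift speed. A minimal sketch, assuming the lateral offset and drift speed come from the video lane tracker; the lane and car widths and the 1 s warning threshold are hypothetical. It also treats an active turn signal as a stand-in for the driver's intention to change lanes, which is one crude answer to the lane-changing question in the notes.

```python
import math

# Hypothetical geometry and threshold, for illustration only.
LANE_WIDTH_M = 3.6
CAR_WIDTH_M = 1.8
TLC_WARNING_S = 1.0


def time_to_line_crossing(offset: float, lateral_speed: float) -> float:
    """Seconds until the car's edge reaches a lane line.

    offset: car center relative to lane center (m, positive = left).
    lateral_speed: drift speed (m/s, positive = leftward).
    Returns math.inf when the car is not drifting.
    """
    margin = (LANE_WIDTH_M - CAR_WIDTH_M) / 2.0  # free space per side when centered
    if lateral_speed > 0:
        distance = margin - offset   # room left before the left line
    elif lateral_speed < 0:
        distance = margin + offset   # room left before the right line
    else:
        return math.inf
    return max(0.0, distance) / abs(lateral_speed)


def should_warn(offset: float, lateral_speed: float, turn_signal_on: bool) -> bool:
    """Warn only for departures that look unintended (no turn signal)."""
    if turn_signal_on:
        return False
    return time_to_line_crossing(offset, lateral_speed) < TLC_WARNING_S


print(should_warn(0.0, 0.5, False))   # centered, slow drift: TLC = 1.8 s
print(should_warn(0.6, 0.5, False))   # near the line: TLC of about 0.6 s
print(should_warn(0.6, 0.5, True))    # same drift, but signaled
```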

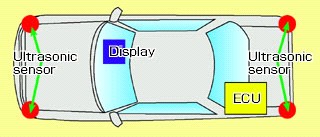

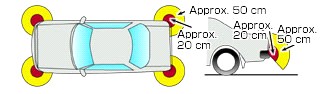

Safety systems – Blind spot systems

- At close range (ultrasonic): detect the corners of the vehicle during parking or when maneuvering through tight spaces.

- At longer ranges (radar): detect other vehicles that are in the driver’s “blind spots”.

Telematics - Information

Advanced Information and Communication Systems

OnStar system: handsets used 1996-99, on-dash buttons since 1999.

The third category is telematics: on-the-road electronic devices or services that provide information (navigation, traffic, weather, etc.) and communication capabilities. The OnStar system, which is widely advertised these days, can be used in case of accidents or for route and traffic information, navigation, and concierge services (hotel and restaurant reservations). It is good to see that they have thought about human factors by changing to a simpler, less distracting input system.

Obviously, there are numerous issues with data input methods (converging on voice these days) and with information display (clutter, driver distraction). The ubiquitous cell phone is also shown.

Telematics - Entertainment

Chrysler PT Cruiser Multi-function display

Panasonic in-car DVD system

Entertainment telematics is one of the hot areas under development. Here is a sampling of what could be available. Microsoft and Clarion introduced the AutoPC a few years back, but its interface and device are very small, and it doesn’t seem to have caught on. Of course, there will probably always be people who plug a laptop into their car’s Ethernet and browse while they drive…

Movies and DVDs are gaining popularity as a way to keep the kids occupied in the back. Surprisingly, there have been several attempts to get these displays into the front seat as well. For a while in Japan, some manufacturers proposed a TV and movie display (DVD now, I guess) just for the front-seat passenger. I don’t have a whole lot of confidence that the driver would remain distraction-free unless the movie was a real dog! I believe that within the last two or three years, Japan has enacted laws against such aftermarket systems.

A cautionary note ...

Anti-lock braking systems (ABS)

- Since ABS improved test-track braking performance, it was assumed that it would also lower crash risk in actual driving conditions.

- Instead, it was found that crash risk was redistributed across different accident types:
  - Lower risk of crashes on wet pavement or with a leading vehicle.
  - Increased risk of rollover crashes and of being rear-ended.

- Crash severity was also higher in ABS vehicle accidents!

There is also some evidence that crash severity was higher, suggesting that people drive at higher speeds (altered behavior due to a changed perception of risk).

Interacting with multiple systems

- The future car will likely have many systems operating at once.

- Drivers have multiple mental models of driving tasks but only one active at any time.

- How does the car determine the active model to provide the right information?

Intentions as a context for interaction

Integrating new safety systems

Managing multiple telematics systems

So the driver’s internal (or intentional) state provides a context for interacting with, or managing, the automation. Depending on the circumstances, drivers have certain expectations about how the car should operate or what information they need.

Example 1 - Integration with intelligent and “active” control and warning systems. If a driver wants to pass another car, his/her car can turn on a “blind-spot” detector or change engine and transmission characteristics to improve safety and performance. If the driver is not about to pass, the warnings remain silent and no action is taken.

Example 2 - Management of information and communication devices. Knowledge of intentions gives some answers as to WHEN these displays should be on or, depending on the information, WHAT should be displayed.

Case in point: telephones. If the system detects that the driver is busy, it may answer and hold the line or take a message.
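The two examples amount to a policy that maps the driver's estimated intention and workload onto how each system should behave. A minimal sketch, in which the intention categories, the settings, and the phone rules are all hypothetical choices made for illustration:

```python
from dataclasses import dataclass
from enum import Enum, auto


class Intention(Enum):
    LANE_KEEPING = auto()
    PASSING = auto()
    TURNING = auto()


@dataclass
class SystemSettings:
    blind_spot_warning: bool   # Example 1: only active when passing
    sporty_powertrain: bool    # Example 1: engine/transmission tuned for passing
    phone_action: str          # Example 2: "ring", "answer_and_hold", or "take_message"


def configure(intention: Intention, driver_busy: bool) -> SystemSettings:
    """Choose system behavior from the estimated intention and workload."""
    passing = intention is Intention.PASSING
    if not driver_busy:
        phone = "ring"
    elif intention in (Intention.PASSING, Intention.TURNING):
        # Short maneuver: answer and hold the line until it is over.
        phone = "answer_and_hold"
    else:
        # Busy for an open-ended period: take a message instead.
        phone = "take_message"
    return SystemSettings(blind_spot_warning=passing,
                          sporty_powertrain=passing,
                          phone_action=phone)


print(configure(Intention.PASSING, driver_busy=True))
print(configure(Intention.LANE_KEEPING, driver_busy=False))
```

The hard part, of course, is estimating the intention and the driver's workload in the first place, which is the question the rest of this section takes up.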

Recognizing intentions - A thought experiment


So how can we recognize or predict driver intentions, given that they are mental states and are not directly observable? Try this thought experiment. Imagine that you are in the front passenger seat observing the driver or, if you are the more secretive type, that you have video cameras in the car watching the driver remotely. Do you think it is possible to tell what the driver is doing from the behavior that you observe?

Most people seem to think that it is possible. In this case, we can see the driver turning the steering wheel, moving their head, shifting gears, or even stepping on pedals.

So our approach assumes that by observing the behavior, such as how they control the car’s heading, acceleration, or velocity, we can indirectly estimate the state of the driver.
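One standard way to formalize "observe behavior, infer a hidden state" is a hidden Markov model (HMM) updated with the forward algorithm. The sketch below is a generic illustration, not necessarily the model used in this work: the two states, the two observation symbols (a coarse summary of steering behavior), and every probability are made up.

```python
# Hypothetical model: hidden driver states and coarse steering observations.
STATES = ("lane_keeping", "lane_change_left")
START = {"lane_keeping": 0.9, "lane_change_left": 0.1}
TRANS = {  # P(next state | current state)
    "lane_keeping": {"lane_keeping": 0.95, "lane_change_left": 0.05},
    "lane_change_left": {"lane_keeping": 0.10, "lane_change_left": 0.90},
}
EMIT = {  # P(observation | state)
    "lane_keeping": {"steady": 0.9, "steer_left": 0.1},
    "lane_change_left": {"steady": 0.3, "steer_left": 0.7},
}


def filter_intentions(observations):
    """Forward algorithm: P(state_t | obs_1..t) after each observation."""
    belief = dict(START)
    history = []
    for i, obs in enumerate(observations):
        if i > 0:
            # Predict: propagate the belief through the transition model.
            belief = {s: sum(belief[p] * TRANS[p][s] for p in STATES) for s in STATES}
        # Update: weight by how well each state explains the observation.
        belief = {s: belief[s] * EMIT[s][obs] for s in STATES}
        total = sum(belief.values())
        belief = {s: b / total for s, b in belief.items()}
        history.append(belief)
    return history


for step in filter_intentions(["steady", "steer_left", "steer_left"]):
    print({s: round(p, 3) for s, p in step.items()})
```

After one steady reading the filter is about 96% sure the driver is lane keeping; two consecutive leftward steering readings push the lane-change probability above 80%.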

Making predictions ... What can be observed?

- State variables of the vehicle
  - Control of velocity, acceleration, steering

- Eye/head movements
  - Fixation or gaze location
  - Head movement is a more gross measure

- Driver Emotional/Physical State

- Traffic Environment State
  - Traffic constraints
  - Roadway constraints

Another observable is the driver’s head or eye movements. An advantage for driving is that, because drivers look ahead of the car and preview the scene, their eye movements tend to precede the actual control movements. Research on eye movements suggests that people make characteristic eye movements depending on their cognitive state. I’ll show a couple of examples of characteristic eye movement patterns from driving in a moment.

Why use eye movements?

- Eye movements are observable actions of a “cognitive state machine” and patterns of eye movements occur depending on state.

- Vision/eye movements provide extra margin for prediction of upcoming behaviors since they tend to precede control actions.

- Environmental conditions can affect EMs
  - e.g., time of day, weather, traffic

- The Human Element

- They are an observable reflection of the cognitive state that the person is in; that is, they reflect the intentions of the driver to act, collect information, check predictions, etc.

- They tend to precede actual control actions by the driver. A clear example is the fact that drivers tend to look ahead of the car, either for controlling it around a curve or obstacle, or to detect potential obstacles or hazardous situations.
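The claim that eye movements tend to precede control actions can be checked on recorded data by finding the time shift at which a gaze signal best correlates with a later control signal. A minimal sketch using cross-correlation on synthetic data; the signals and the 5-sample lead are invented for illustration, and with real recordings the lag would be converted to seconds using the sampling rate.

```python
import math
from typing import Sequence


def correlation(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def gaze_lead(gaze: Sequence[float], control: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) at which gaze best matches the later control signal.

    Compares gaze[t] with control[t + lag] for lag = 0..max_lag and returns
    the lag with the highest correlation; a positive result means gaze leads.
    """
    best_lag, best_r = 0, -math.inf
    for lag in range(max_lag + 1):
        r = correlation(gaze[:len(gaze) - lag], control[lag:])
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag


# Synthetic example: steering repeats the gaze signal 5 samples later.
gaze = [math.sin(0.2 * t) + 0.3 * math.sin(0.53 * t) for t in range(200)]
steering = [0.0] * 5 + gaze[:-5]
print(gaze_lead(gaze, steering, max_lag=10))
```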

The human element

- Individual differences between drivers
  - Cultural
  - Experience
  - Training
    - Generally low, except for professional truck drivers.
    - Very low compared to pilots and plant operators.

- Changes within individual drivers
  - Driving experience
  - Age effects
  - Emotional states
  - Physiological states

Also, unlike pilots, I would generally classify drivers as not highly trained, especially newer drivers. In some places it doesn’t take much to get a license beyond paying your money and being able to start the car! In this respect the situation is more like that of predictive systems in everyday computing than that of aviation or plant control. Professional truck drivers are probably an exception, and that’s probably why technology is often introduced to this group first. For the rest of us, knowing that our own driving behavior is helping to guide the operation of the car will also alter that behavior in some way.

The bottom line is that any sort of system will have to adapt itself to changes in the driver.
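One simple form of such adaptation is to judge each new measurement against the individual driver's own running baseline instead of a fixed population threshold. A minimal sketch, assuming a hypothetical measure (time headway, in seconds) and a hypothetical smoothing factor; it keeps an exponentially weighted mean and variance that slowly track the driver.

```python
import math


class AdaptiveBaseline:
    """Exponentially weighted running mean and variance of one driver measure."""

    def __init__(self, alpha: float = 0.05, mean: float = 0.0, var: float = 1.0):
        self.alpha = alpha  # how quickly the baseline follows the driver (0 < alpha <= 1)
        self.mean = mean
        self.var = var

    def update(self, x: float) -> None:
        """Fold a new observation into the running mean and variance."""
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1.0 - self.alpha) * (self.var + diff * incr)

    def zscore(self, x: float) -> float:
        """How unusual x is for this driver, in baseline standard deviations."""
        return (x - self.mean) / math.sqrt(self.var)


# Start from a generic prior (2.0 s headway), then let this driver's habits
# (headways alternating around 1.5 s) pull the baseline toward them.
baseline = AdaptiveBaseline(alpha=0.05, mean=2.0, var=0.25)
for i in range(200):
    baseline.update(1.4 if i % 2 == 0 else 1.6)
print(round(baseline.mean, 2), round(baseline.zscore(2.0), 1))
```

Because the baseline keeps moving, a driver whose habits drift (with experience, age, or fatigue) is compared with their own recent behavior; the catch, as the notes point out, is that the driver's behavior may in turn change in response to the system.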

Part 2: What’s going on at the driver’s eyes?

Part 3: What’s going on in the car’s brain?

References

© 2003 • contact Matthias Roetting • last revision December 11th, 2003